Update:

Alessandro Padovani pointed out that Blender already has a tool which does this. It is called Bake Action and is found in the Object > Animation > Bake Action menu. Therefore, the new Unwind Action tool has been removed again. The motivation for using it remains the same.

In the options, set Bake Data to Pose.

Original Post:

Morphs in DAZ Studio correspond to either shapekeys or driven poses in Blender, or possibly to a combination of both. The preferred way to animate morphs is to animate the corresponding rig properties, which is simple enough as long as you stay within Blender. However, driven poses are highly non-portable and not supported by standard export formats such as FBX or Collada. This means that morph animations cannot be readily exported to other applications, e.g. to game engines such as Unity or Unreal.

One way to get around this problem is to convert driven poses to shapekeys, using the Convert Standard Morphs To Shapekeys and Convert Custom Morphs To Shapekeys buttons in the Advanced Setup > Morphs section. This works for most facial morphs, because the face bones are translated and not rotated. However, converting a driven pose to shapekeys has two drawbacks:

1. It is wasteful, because shapekeys take up much more space than poses. The movement of each vertex is stored instead of just the movement of bones.

2. It only works if the bones are translated but not rotated. At intermediate values, a vertex moves along straight lines with shapekeys, but along arcs with bone rotation.
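To put a rough number on the first point: Blender stores a shapekey as a complete set of vertex coordinates, while a driven pose only needs a few channel values per driven bone. The sketch below is an order-of-magnitude estimate, not a measurement of any DAZ figure; it assumes single-precision floats, three channels per bone, and hypothetical counts of 20,000 vertices and 15 finger bones.

```python
# Order-of-magnitude storage estimate: shapekey vs. driven pose.
# All counts are hypothetical placeholders, not measurements.

FLOAT_BYTES = 4  # assume single-precision floats


def shapekey_bytes(num_vertices: int) -> int:
    """A shapekey stores an x, y, z coordinate for every vertex of the mesh."""
    return num_vertices * 3 * FLOAT_BYTES


def driven_pose_bytes(num_bones: int, channels_per_bone: int = 3) -> int:
    """A driven pose stores only a few channel values per driven bone."""
    return num_bones * channels_per_bone * FLOAT_BYTES


verts = 20_000  # hypothetical vertex count of a character mesh
bones = 15      # hypothetical number of finger bones moved by a fist morph
print(shapekey_bytes(verts), driven_pose_bytes(bones))  # prints "240000 180"
```

Note that the shapekey cost grows with the whole mesh, even though a fist morph only moves the hand vertices.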

To illustrate the latter point, consider the standard body morph lHandFist.

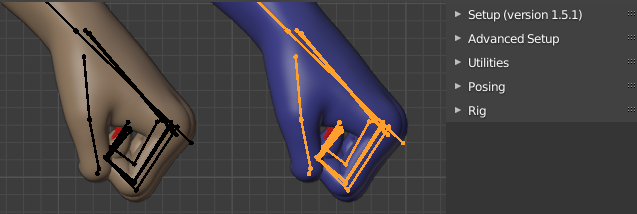

Here is the morph at 0%, 50%, and 100%.

And here is the same morph after it has been converted to shapekeys. The extreme values are the same as the driven poses, but the shapekey at 50% is clearly a total mess.
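The reason can be seen with a single vertex. The sketch below assumes a vertex one unit from a joint that the morph bends by 90 degrees at 100%, and a driver that scales the joint angle linearly with the morph value; both are illustrative assumptions, not values taken from the lHandFist morph. Rotating the bone keeps the vertex on a circle around the joint, while the shapekey blends linearly between the 0% and 100% positions, so at 50% the vertex is pulled in toward the joint.

```python
import math


def rotate(point, angle):
    """Rotate a 2D point about the origin, which stands in for the joint."""
    x, y = point
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y, s * x + c * y)


def driven_pose(point, full_angle, value):
    """Bone rotation: the morph value scales the joint angle (assumed linear)."""
    return rotate(point, value * full_angle)


def shapekey(point, full_angle, value):
    """Shapekey: linear blend between the 0% and the 100% vertex positions."""
    target = rotate(point, full_angle)
    return tuple(p + value * (t - p) for p, t in zip(point, target))


vertex = (1.0, 0.0)       # one unit away from the joint
full_angle = math.pi / 2  # hypothetical 90-degree bend at 100%

# Distance from the joint at 50%: rotation keeps it, the shapekey shrinks it.
print(round(math.hypot(*driven_pose(vertex, full_angle, 0.5)), 3))  # prints 1.0
print(round(math.hypot(*shapekey(vertex, full_angle, 0.5)), 3))     # prints 0.707
```

Both methods agree at 0% and 100%, matching the extreme values in the pictures; only the intermediate values differ, and in a finger every bending joint along the chain contributes such an error.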

To address this problem and make it possible to export morphs to other applications, I introduced a new tool called Unwind Action, which converts an action with driven poses into an action with pure bone transforms and no drivers.

Here we have animated the driven pose lHandFist. (More precisely, it is a pose with name CTRLlHandFist and label lHandFist. In DAZ Studio and in the Body Morph panel the label is displayed, but the Graph and Action editors use the name instead.) The morph starts at value 0 at frame 1, goes up to value 1 at frame 11, and returns to 0 at frame 21.

We now invoke the Unwind Action tool.

A new action is created with the name "U_" plus the old action name; U is for Unwound. Instead of F-curves for the rig properties that drive the pose, it has F-curves for the bones themselves.
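One way to perform such a conversion, similar in spirit to how Blender's Bake Action samples an animation, is to evaluate the driven pose at every frame and write the resulting bone values as ordinary keyframes. The pure-Python sketch below is not the tool's actual code: it assumes linear interpolation between keys (Blender F-curves default to Bezier), and the bone channel labels and driver coefficients are hypothetical. The property keys mirror the example above: 0 at frame 1, 1 at frame 11, and 0 at frame 21.

```python
from bisect import bisect_right


def evaluate_fcurve(keys, frame):
    """Evaluate sorted (frame, value) keys with linear interpolation.

    Linear interpolation is an assumption made to keep the sketch short.
    Values are held constant outside the key range.
    """
    frames = [f for f, _ in keys]
    if frame <= frames[0]:
        return keys[0][1]
    if frame >= frames[-1]:
        return keys[-1][1]
    i = bisect_right(frames, frame)  # frames[i - 1] <= frame < frames[i]
    (f0, v0), (f1, v1) = keys[i - 1], keys[i]
    t = (frame - f0) / (f1 - f0)
    return v0 + t * (v1 - v0)


def bake(prop_keys, drivers, frame_start, frame_end):
    """Replace one rig-property F-curve by per-frame keys on bone channels.

    drivers maps a bone channel label to a function of the property value,
    standing in for the driver expression. Returns {label: [(frame, value)]}.
    """
    baked = {label: [] for label in drivers}
    for frame in range(frame_start, frame_end + 1):
        value = evaluate_fcurve(prop_keys, frame)
        for label, driver in drivers.items():
            baked[label].append((frame, driver(value)))
    return baked


# Rig property keys from the example: 0 at frame 1, 1 at frame 11, 0 at frame 21.
ctrl_keys = [(1, 0.0), (11, 1.0), (21, 0.0)]

# Hypothetical linear drivers: bend angle in radians per unit of the morph.
drivers = {
    "lIndex2 rot X": lambda v: 1.4 * v,
    "lIndex3 rot X": lambda v: 1.0 * v,
}

action = bake(ctrl_keys, drivers, 1, 21)
print(len(action["lIndex2 rot X"]))  # prints 21
print(action["lIndex2 rot X"][10])   # prints (11, 1.4)
```

The baked curves no longer reference the rig property at all, so they can animate an armature that has no drivers. Sampling every frame also makes the result independent of how the driver expressions are written, at the cost of one key per frame per channel.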

If we replace the action of the original armature nothing happens, because the finger bones are still driven by rig properties. But if we import the character again (this time with blue skin) and load the new action, it will move in the same way as the old character, although it does not have any bone drivers.

Since the unwound action only involves bone rotations and no drivers, it should work when exported to other applications.

This is the first version of this tool. There are at least two improvements that immediately come to mind:

1. Some adjustments are necessary if the driven bones have been made posable with the Make All Bones Posable tool.

2. The same rig property can drive both bone rotations and shapekeys, and that case should be handled too.

In the near future these issues will be addressed.
