Update August 26, 2022

There have been some improvements since this post was originally written. First of all, the name of the button has changed; it is now called Import Expression rather than Import Morph. This reflects the typical usage: you import an expression from a directory called Expressions, and a pose from a directory called Poses. However, both Import Pose and Import Expression can import both poses and expressions.

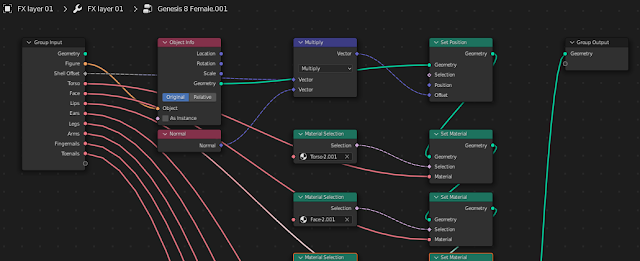

There are now two buttons in the Morphs section:

As mentioned above, the import tool has been renamed to Import Expressions.

- Affect Bones: If enabled, poses are imported as well as morphs.

- Clear Morphs:

- Use Scanned Database:

- Check For Updates: Check if the database for the active character needs to be rescanned because new morphs have been installed. Can be disabled if you know that the database is up-to-date.

- Load Missing Morphs:

- Category: Loaded custom morphs are put in this category. Defaults to Loaded.

- Make All Bones Posable: Make all bones posable again if any loaded morph has made bones driven.

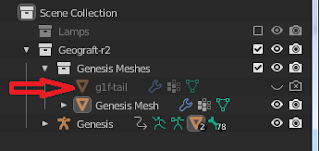

- Affect Geografts:

- Auto Keying: Automatically insert keyframes at the current frame.

Original post:

Morphs lead to problems. On the one hand we want to load lots of them, so they are available when we need them. On the other hand we run into serious performance problems when many morphs are loaded. It takes a long time to load many morphs, and a character with many loaded morphs becomes very sluggish when we animate. Also, the user interface lacks visual cues (what do the morphs BEF01, BEF02, etc. look like?), and in the end we have probably forgotten to load the morph we needed anyway.

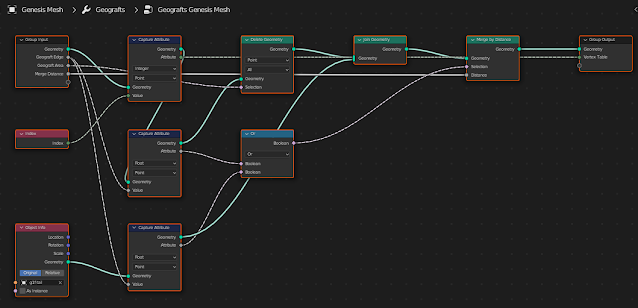

Why there is a performance problem is not a mystery. All drivers have to be recalculated every time the viewport is updated, and there are a lot of drivers if many morphs are loaded. It can be avoided with the Disable Drivers button, but that can only be done while we pose the character, not when we tweak the morphs.

But it doesn't have to be that way. Many custom morphs are simply combinations of basic morphs, either the face units or the FACS morphs, so it should be enough to load those. In the latest development version we can do just that.
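The idea can be sketched in plain Python. This is only an illustration, not the add-on's actual code or data format: the dictionary layout, the morph names, and the weights are all made up. It shows how a custom morph stored as weights on basic morphs can be reproduced with only the basic morphs loaded.

```python
# Hypothetical scanned database: every custom morph is stored as a set of
# weights on basic morphs. Names and numbers are invented for illustration.
SCANNED_DATABASE = {
    "Smile Open": {"MouthSmileLeft": 1.0, "MouthSmileRight": 1.0, "JawOpen": 0.4},
    "Surprised": {"BrowInnerUp": 0.8, "JawOpen": 0.6},
}


def expand_expression(name, database):
    """Return the basic-morph values that reproduce a custom morph at 100%."""
    return dict(database[name])


def basic_morphs_needed(names, database):
    """List the basic morphs that must be loaded to cover the given custom morphs."""
    needed = set()
    for name in names:
        needed.update(database[name])
    return sorted(needed)


values = expand_expression("Smile Open", SCANNED_DATABASE)
needed = basic_morphs_needed(["Smile Open", "Surprised"], SCANNED_DATABASE)
```

In this sketch two custom morphs need only four basic morphs, and the number of basic morphs stays fixed however many custom morphs are added to the database.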

Scanning the database can take a long time, but fortunately it only has to be done once, or rather once every time new morphs have been installed on your system. Have a cup of coffee while the computer works.

Note that the expression is always loaded at 100%. There is no slider that we can adjust if we want to reduce the strength of the morph, but if we loaded the morph with Auto Keying enabled, we can achieve similar results by scaling the F-curves.
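Scaling the F-curves can be done by hand in the Graph Editor, but a small script may be easier when many morphs are keyed. The following is a minimal sketch, not part of the add-on: `scale_fcurve_values` is a hypothetical helper that assumes the keyframe layout Blender exposes (`keyframe_points`, each with `co`, `handle_left` and `handle_right`). A stand-in curve is used so the sketch runs outside Blender.

```python
from types import SimpleNamespace


def scale_fcurve_values(fcurve, factor):
    """Scale the value (second coordinate) of every keyframe and its handles."""
    for point in fcurve.keyframe_points:
        point.co[1] *= factor
        point.handle_left[1] *= factor
        point.handle_right[1] *= factor


def make_point(frame, value):
    """Stand-in for a Blender keyframe; only for running the sketch outside Blender."""
    return SimpleNamespace(
        co=[frame, value],
        handle_left=[frame - 1, value],
        handle_right=[frame + 1, value],
    )


# A morph keyed at 0.0 on frame 1 and at 1.0 (100%) on frame 10.
demo_curve = SimpleNamespace(keyframe_points=[make_point(1, 0.0), make_point(10, 1.0)])

# Reduce the expression to half strength.
scale_fcurve_values(demo_curve, 0.5)
```

Inside Blender the same function could presumably be applied to the F-curves of the action that holds the keyed morph properties, followed by a call to `fcurve.update()`. Which action and data paths hold those keys depends on the setup, so that part is left out here.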